Overview

Artificial intelligence (“AI”) systems, which have recently become increasingly widespread in almost every sector, are machine-based computer systems designed to operate with varying levels of autonomy and demonstrate adaptability after deployment. AI systems process inputs to achieve explicit or implicit goals and produce outputs such as predictions, content, recommendations or decisions that can affect physical or virtual environments.

The European Union (“EU”) Artificial Intelligence Act (“AI Act”), the world’s first comprehensive legislation aimed at regulating the sector, was adopted by the Council of the European Union on May 21, 2024 after long periods of negotiation and legislative preparation and entered into force (directly for EU member states) on August 1, 2024. Harmonization transition periods are generally envisaged to last two years but will vary according to associated risk levels. AI systems presenting an “unacceptable risk” will be banned within 6 months of the Act’s entry into force, while certain obligations for “General Purpose Artificial Intelligence” (“GPAI”) systems, as defined below, will apply after 12 months. Implementation of provisions for high-risk AI systems will begin on August 2, 2027.

The AI Act covers all stages of AI systems, from development to use, and aims to ensure AI is a regulated and trusted mechanism and to encourage investment and innovation in the field. Within the framework of the AI value chain¹, it applies to providers (developers and companies instructing development), importers, distributors, manufacturers and deployers of certain types of artificial intelligence systems.

The AI Act’s obligations vary according to roles played in

the AI value chain with a focus on providers, distributors,

importers and manufacturers of AI systems. Depending on

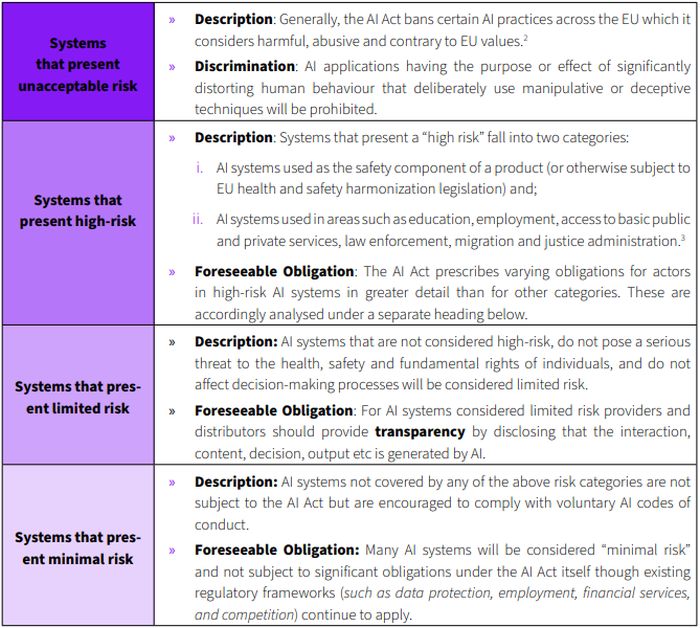

the level of threat posed to humans, AI systems are categorized as

presenting “unacceptable risk“,

“high-risk” and “limited

risk“. Violations are subject to fines at the following

scales: (i) €35,000,000 or 7% of worldwide annual turnover

(unacceptable risk); (ii) €15,000,000 or 3% of worldwide

annual turnover (high risk); and (iii) €7,500,000 or 1% of

worldwide annual turnover (limited risk).

Who are the actors under the new Act?

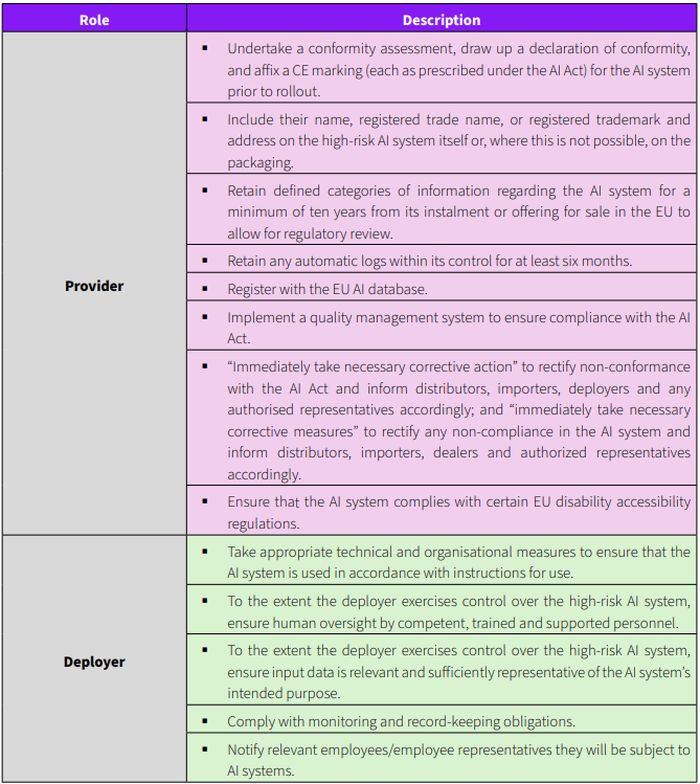

Obligations under the new law will differ according to the

following roles in the “AI value chain”, defined by the

Act as “operators”, with most falling on providers of AI

systems:

The European Commission will delegate its powers for implementing AI Act obligations to the AI Office to be established (without prejudice to agreements between EU Member States and the EU), which will take all actions necessary for effective implementation.

Are only EU companies affected?

The AI Act will employ extra-territorial jurisdiction similar to the European Union’s General Data Protection Regulation (“GDPR”), covering:

- Businesses supplying AI systems within the EU (regardless of whether they are established in the EU or not); and
- Businesses located outside of the EU if the output of their AI system is used within the EU.

The AI Act imposes direct obligations on providers (developers of AI systems), deployers, importers, distributors and manufacturers of AI systems operating within the EU in connection with the EU market, but also applies extraterritorially to non-EU companies. Therefore, providers must comply with its provisions when placing their AI systems or GPAI models on the market, or putting them into service in the EU, regardless of their location. Importers, distributors and manufacturers serving the EU market will also be affected. The Act covers:

- providers placing AI systems on the market or putting them into service in the EU, or placing GPAI models on the EU market;
- deployers of AI systems who have a place of establishment or are located in the EU; and
- providers and deployers of AI systems in non-EU countries where the output produced by the AI system is being used in the EU.

What are the risk levels?

In order to implement the Act’s provisions proportionately and effectively, a risk-based approach has been adopted under which AI systems have been categorized according to the scope of the risks each may pose.

Obligations for High-Risk AI Systems

The AI Act imposes a wide range of obligations on providers for AI systems deemed to be “high risk” including risk assessments; maintaining governance and documentation; public registration and compliance assessments; and prevention of unauthorized modifications.

Deployers are required to comply with significantly more limited obligations such as implementation of technical and organizational measures to comply with usage restrictions and ensuring competent human oversight.

High-risk AI systems must also undergo a conformity assessment before being placed on the market or put into service. This process includes the establishment of a monitoring system and reporting of serious incidents to market surveillance authorities.

They are also subject to comprehensive compliance requirements covering seven main areas:

- Risk management systems. These must be “established, implemented, documented and maintained”. Such systems must be developed iteratively and planned and run throughout the system’s entire lifecycle while subject to systematic review and updates.
- Data governance and management practices. Training, validation and testing data sets for high-risk AI systems must be subject to appropriate data governance and management practices. The AI Act sets out specific practices which must be considered, including data collection processes and appropriate measures to detect, prevent and mitigate biases.
- Technical documentation. Relevant technical documentation must be drawn up before a high-risk AI system is placed on the market or “put into service” (i.e. installed for use) and must be kept up to date.
- Record keeping/logging. High-risk AI systems must “technically allow for” recording of automatic logs over the lifetime of the system to ensure system functioning maintains an appropriate level of traceability for its intended purpose.
- Transparency / provision of information to deployers. High-risk AI systems must be designed and developed to ensure sufficient transparency to allow deployers to interpret output and utilise it appropriately. Instructions for use must be provided in a format appropriate for the AI system.
- Human oversight. “Natural persons” must oversee high-risk AI systems with a view to preventing or minimising risks to health, safety or fundamental rights that may arise from their use.
- Accuracy, robustness and cybersecurity. High-risk AI systems must be designed and developed to achieve an appropriate level of accuracy, robustness and cybersecurity and to perform in accordance with these levels throughout their lifecycle.

Providers based outside the EU are required to appoint an authorized representative based in the EU.

Providers must ensure their high-risk AI systems comply with the requirements set out above and must demonstrate compliance, including by providing the information requested, when reasonably requested to do so by a national competent regulator.

Providers and deployers of high-risk AI systems must also:

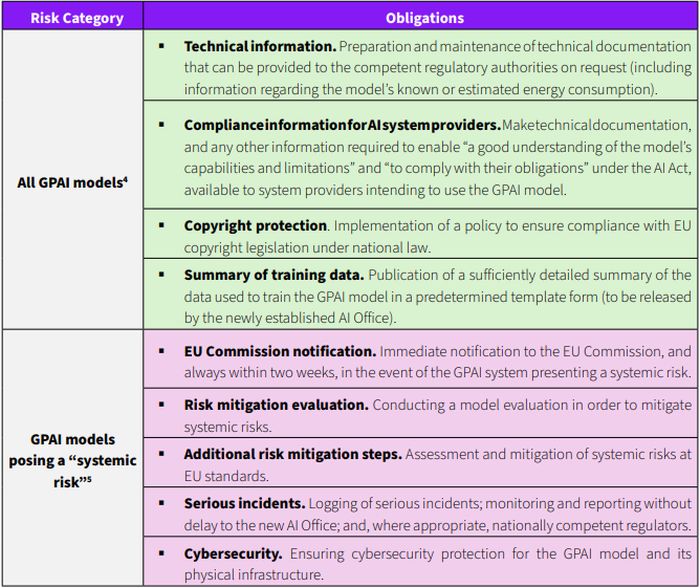

General Purpose Artificial Intelligence (GPAI) Models

The AI Act sets out a specific section for the classification and regulation of GPAI models, which are defined as “AI model(s) that exhibit significant generality, including when trained with large amounts of data using large-scale self-supervision, and capable of competently performing a wide range of tasks, regardless of how the model is released, and which can be integrated into various downstream systems or applications (with the exception of AI models used for research, development or prototyping activities prior to release)”.

Though GPAI models are essential components of AI systems, they cannot constitute AI systems on a standalone basis. Examples include “models that can be released in various forms including libraries, application programming interfaces (APIs), direct download or physical copy”.

Fines

Significant fines will be imposed on businesses which contravene the new legislation. Similar to the GDPR, these will be capped at a percentage of the previous financial year’s global annual turnover or a fixed amount (whichever is higher):

- €35 million or 7% of global annual turnover for non-compliance with prohibited AI system rules;
- €15 million or 3% of global annual turnover for violations of other obligations; and
- €7.5 million or 1% of global annual turnover for supplying incorrect, incomplete or misleading information required under the AI Act.

The AI Act states that penalties will be effective, proportionate and dissuasive. Accordingly, the circumstances and economic viability of SMEs and start-ups will be considered.
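
The “whichever is higher” cap described above can be illustrated with a short calculation. The following is a minimal Python sketch and not a statement of how any regulator computes penalties: the tier names and the `max_fine` function are hypothetical labels introduced here for illustration, the amounts are those listed above, and the result is only the upper limit for a tier, since actual fines depend on the circumstances of each case.

```python
from decimal import Decimal

# Tiers as listed in this article: (fixed amount in euros, share of global annual turnover).
# The dictionary keys are illustrative labels, not terms used in the AI Act.
FINE_TIERS = {
    "prohibited_practices": (Decimal("35000000"), Decimal("0.07")),
    "other_obligations": (Decimal("15000000"), Decimal("0.03")),
    "incorrect_information": (Decimal("7500000"), Decimal("0.01")),
}


def max_fine(tier: str, global_annual_turnover: Decimal) -> Decimal:
    """Return the upper limit for a tier: the higher of the fixed amount
    and the percentage of the previous year's global annual turnover."""
    fixed_amount, turnover_share = FINE_TIERS[tier]
    return max(fixed_amount, turnover_share * global_annual_turnover)


if __name__ == "__main__":
    # Hypothetical turnover figures chosen only to show both branches of the rule.
    print(max_fine("prohibited_practices", Decimal("1000000000")))
    print(max_fine("other_obligations", Decimal("100000000")))
```

For a business with €1 billion in global annual turnover, the percentage governs the top tier because 7% of turnover exceeds €35 million; for a business with €100 million in turnover, the fixed amount of €15 million governs the second tier because 3% of turnover is lower.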

Footnotes

1. The general name given to transactions related to services or products offered by an entity or type of activity.

2. For example, AI systems presenting unacceptable risks to ethical values, such as emotion recognition systems or inappropriate use of social scoring, are prohibited.

3. Systems where intended use is limited to (i) performing narrow procedural tasks; (ii) making improvements to the results of previously completed human activities; (iii) detecting decision-making patterns or deviations from previous decision-making patterns without changing or influencing human judgments; and (iv) performing only preparatory tasks for risk assessment.

4. The general transparency requirements, as they apply to all GPAI models, impose various obligations on GPAI model providers.

5. GPAI models will be considered to pose a “systemic” risk where they are “widely diffusible” and have a “high impact” along the AI value chain. These are subject to obligations in addition to the transparency requirements above.
